Creator of Datasette and Lanyrd, co-creator of the Django Web Framework.

RSS feed URL: https://simonwillison.net/atom/everything/

1970-01-01 08:00:00

Spam, junk … slop? The latest wave of AI behind the ‘zombie internet’

I'm quoted in this piece in the Guardian about slop:

I think having a name for this is really important, because it gives people a concise way to talk about the problem.

Before the term ‘spam’ entered general use it wasn’t necessarily clear to everyone that unwanted marketing messages were a bad way to behave. I’m hoping ‘slop’ has the same impact – it can make it clear to people that generating and publishing unreviewed AI-generated content is bad behaviour.

1970-01-01 08:00:00

NumFOCUS DISCOVER Cookbook: Minimal Measures

NumFOCUS publish a guide "for organizers of conferences and events to support and encourage diversity and inclusion at those events."

It includes this useful collection of the easiest and most impactful measures that events can put in place, covering topics such as accessibility, speaker selection, catering and provision of gender-neutral restrooms.

1970-01-01 08:00:00

Fast groq-hosted LLMs vs browser jank

Groq is now serving LLMs such as Llama 3 so quickly that JavaScript which attempts to render Markdown strings on every new token can cause performance issues in browsers.

Taras Glek's solution was to move the rendering to a requestAnimationFrame() callback, effectively buffering the rendering to the fastest rate the browser can support.
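The buffering idea can be illustrated outside the browser. What follows is a minimal Python simulation, not Taras Glek's code: tokens are appended to a buffer as they arrive, and the expensive render step runs at most once per frame. The `FrameBufferedRenderer` class and its method names are hypothetical; in a browser the `on_frame()` step would be scheduled with `requestAnimationFrame()`.

```python
class FrameBufferedRenderer:
    """Coalesce streamed tokens so rendering happens at most once per frame."""

    def __init__(self, render):
        self.render = render      # expensive callback, e.g. Markdown to HTML
        self.text = ""            # full text received so far
        self.dirty = False        # True when tokens arrived since the last render
        self.render_count = 0

    def on_token(self, token):
        # Cheap: append the token and mark the buffer as needing a repaint.
        self.text += token
        self.dirty = True

    def on_frame(self):
        # Called once per display frame; does nothing if no new tokens arrived.
        if self.dirty:
            self.render(self.text)
            self.render_count += 1
            self.dirty = False


rendered = []
renderer = FrameBufferedRenderer(rendered.append)

# Simulate 3 frames, with 4 tokens arriving before each frame.
tokens = ["tok%d " % i for i in range(12)]
for frame in range(3):
    for token in tokens[frame * 4:(frame + 1) * 4]:
        renderer.on_token(token)
    renderer.on_frame()

print(renderer.render_count, "renders for", len(tokens), "tokens")
```

However fast the tokens arrive, the number of renders is bounded by the number of frames rather than the number of tokens.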

Via lobste.rs

1970-01-01 08:00:00

Great piece by Drew Breunig: “Imagine having products THIS GOOD and still over-selling them.”

1970-01-01 08:00:00

AI counter app from my PyCon US keynote

In my keynote at PyCon US this morning I ran a counter at the top of my screen that automatically incremented every time I said the words "AI" or "artificial intelligence", using vosk, pyaudio and Tkinter. I wrote it in a few minutes with the help of GPT-4o - here's the code I ran as a GitHub repository.

I'll publish full detailed notes from my talk once the video is available on YouTube.
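The counting step of an app like this can be sketched in a few lines. This is not the code from the repository: it assumes the speech recognizer hands over chunks of plain text, and the `count_mentions` helper and the sample chunks are hypothetical. The audio capture (pyaudio), recognition (vosk) and display (Tkinter) parts are left out.

```python
import re

# Matches "AI" or "artificial intelligence" as whole words, ignoring case.
PATTERN = re.compile(r"\b(?:ai|artificial intelligence)\b", re.IGNORECASE)


def count_mentions(transcript: str) -> int:
    """Return how many times the tracked phrases appear in a transcript."""
    return len(PATTERN.findall(transcript))


total = 0
# Hypothetical chunks of recognized text, standing in for recognizer output.
chunks = [
    "today I said AI again",
    "artificial intelligence is everywhere",
    "no mentions here",
]
for chunk in chunks:
    total += count_mentions(chunk)

print(total)
```

The word boundaries keep words such as "said" and "again" from counting. A phrase split across two chunks would be missed by this sketch.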

1970-01-01 08:00:00

I rewrote it [the Oracle of Bacon] in Rust in January 2023 when I switched over to TMDB as a data source. The new data source was a deep change, and I didn’t want the headache of building it in the original 1990s-era C codebase.

1970-01-01 08:00:00

Understand errors and warnings better with Gemini

As part of Google's Gemini-in-everything strategy, Chrome DevTools now includes an opt-in feature for passing error messages in the JavaScript console to Gemini for an explanation, via a lightbulb icon.

Amusingly, this documentation page includes a warning about prompt injection:

Many of LLM applications are susceptible to a form of abuse known as prompt injection. This feature is no different. It is possible to trick the LLM into accepting instructions that are not intended by the developers.

They include a screenshot of a harmless example, but I'd be interested in hearing if anyone has a theoretical attack that could actually cause real damage here.

Via Hacker News

1970-01-01 08:00:00

Commit: Add a shared credentials relationship from twitter.com to x.com

A commit to shared-credentials.json in Apple's password-manager-resources repository. Commit message: "Pour one out."

1970-01-01 08:00:00

I have seen the extremely restrictive off-boarding agreement that contains nondisclosure and non-disparagement provisions former OpenAI employees are subject to. It forbids them, for the rest of their lives, from criticizing their former employer. Even acknowledging that the NDA exists is a violation of it.

If a departing employee declines to sign the document, or if they violate it, they can lose all vested equity they earned during their time at the company, which is likely worth millions of dollars.

1970-01-01 08:00:00

PSF announces a new five year commitment from Fastly

Fastly have been donating CDN resources to Python, most notably to the PyPI package index, for ten years now.

The PSF just announced at PyCon US that Fastly have agreed to a new five year commitment. This is a really big deal, because it addresses the strategic risk of having a key sponsor like this who might change their support policy based on unexpected future conditions.

Thanks, Fastly. Very much appreciated!

1970-01-01 08:00:00

Programming mantras are proverbs

I like this idea from Luke Plant that the best way to think about mantras like "Don’t Repeat Yourself" is to think of them as proverbs that can be accompanied by an equal and opposite proverb.

DRY, "Don't Repeat Yourself", matches with WET, "Write Everything Twice".

Proverbs as tools for thinking, not laws to be followed.

Via lobste.rs

1970-01-01 08:00:00

[...] by default Heroku will spin up multiple dynos in different availability zones. It also has multiple routers in different zones so if one zone should go completely offline, having a second dyno will mean that your app can still serve traffic.

1970-01-01 08:00:00

But where the company once limited itself to gathering low-hanging fruit along the lines of “what time is the super bowl,” on Tuesday executives showcased generative AI tools that will someday plan an entire anniversary dinner, or cross-country-move, or trip abroad. A quarter-century into its existence, a company that once proudly served as an entry point to a web that it nourished with traffic and advertising revenue has begun to abstract that all away into an input for its large language models.

1970-01-01 08:00:00

One of the more over-looked announcements from Google I/O yesterday was PaliGemma, an openly licensed VLM (Vision Language Model) in the Gemma family of models.

The model accepts an image and a text prompt. It outputs text, but that text can include special tokens representing regions on the image. This means it can return both bounding boxes and fuzzier segment outlines of detected objects, behavior that can be triggered using a prompt such as "segment puffins".

You can try it out on Hugging Face.

It's a 3B model, making it feasible to run on consumer hardware.

Via Roboflow: PaliGemma: Open Source Multimodal Model by Google

1970-01-01 08:00:00

OpenAI: Managing your work in the API platform with Projects

New OpenAI API feature: you can now create API keys for "projects" that can have a monthly spending cap. The UI for that limit says:

If the project's usage exceeds this amount in a given calendar month (UTC), subsequent API requests will be rejected

You can also set custom token-per-minute and request-per-minute rate limits for individual models.

I've been wanting this for ages: this means it's finally safe to ship a weird public demo on top of their various APIs without risk of accidental bankruptcy if the demo goes viral!

Via @romainhuet

1970-01-01 08:00:00

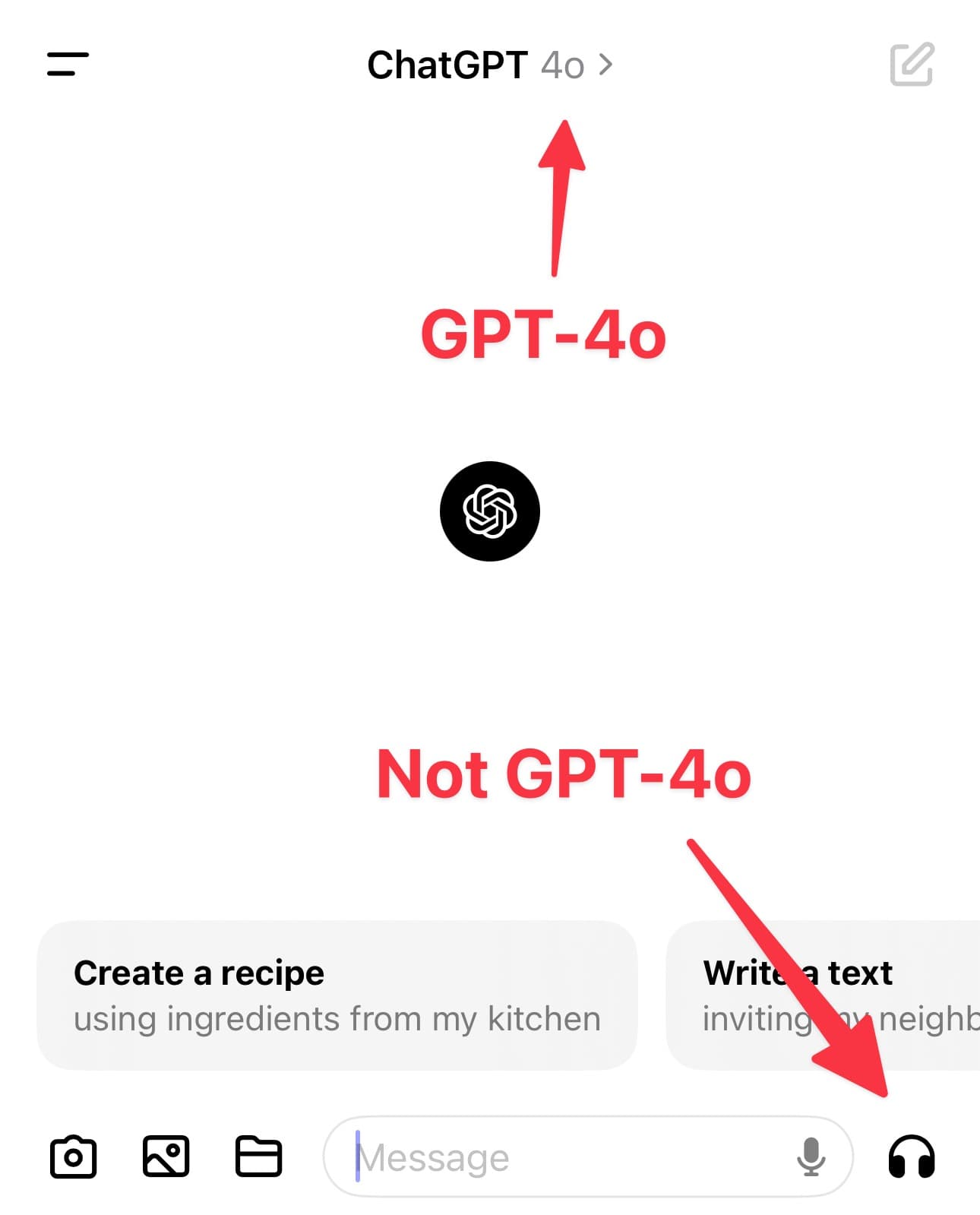

Monday's OpenAI announcement of their new GPT-4o model included some intriguing new features:

They also made the new 4o model available to paying ChatGPT Plus users, on the web and in their apps.

But, crucially, those big new features were not part of that release.

Here's the relevant section from the announcement post:

We recognize that GPT-4o’s audio modalities present a variety of novel risks. Today we are publicly releasing text and image inputs and text outputs. Over the upcoming weeks and months, we’ll be working on the technical infrastructure, usability via post-training, and safety necessary to release the other modalities.

This is catching out a lot of people. The ChatGPT iPhone app already has image output, and it already has a voice mode. These worked with the previous GPT-4 mode and they still work with the new GPT-4o mode... but they are not using the new model's capabilities.

Lots of people are discovering the voice mode for the first time - it's the headphone icon in the bottom right of the interface.

They try it and it's impressive (it was impressive before) but it's nothing like as good as the voice mode in Monday's demos.

Honestly, it's not at all surprising that people are confused. They're seeing the "4o" option and, understandably, are assuming that this is the set of features that were announced earlier this week.

Think about what you need to know in order to understand what's going on here:

GPT-4o is a brand new multi-modal Large Language Model. It can handle text, image and audio input and produce text, image and audio output.

But... the version of GPT-4o that has been made available so far - both via the API and via the OpenAI apps - is only able to handle text and image input and produce text output. The other features are not yet available outside of OpenAI (and a select group of partners).

And yet in the apps it can still handle audio input and output and generate images. That's because the app version of the model is wrapped with additional tools.

The audio input is handled by a separate model called Whisper, which converts speech to text. That text is then fed into the LLM, which generates a text response.

The response is passed to OpenAI's boringly-named tts-1 (or maybe tts-1-hd) model (described here), which converts that text to speech.
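The three-stage arrangement described above can be sketched as a simple pipeline. This is an illustration of the architecture only, not OpenAI's implementation: the `voice_turn` function is hypothetical, and the three stub functions stand in for Whisper, the text LLM and tts-1 so that the example runs without any API calls.

```python
from typing import Callable


def voice_turn(
    audio_in: bytes,
    speech_to_text: Callable[[bytes], str],
    llm: Callable[[str], str],
    text_to_speech: Callable[[str], bytes],
) -> bytes:
    """Run one spoken exchange through three separate models."""
    transcript = speech_to_text(audio_in)  # role played by Whisper
    reply_text = llm(transcript)           # role played by the text LLM
    return text_to_speech(reply_text)      # role played by tts-1


# Stand-in stubs (assumptions for illustration, not real model calls).
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(prompt: str) -> str:
    return "You said: " + prompt


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


audio_out = voice_turn(b"hello", fake_stt, fake_llm, fake_tts)
print(audio_out)
```

Only text crosses each boundary, so anything that is not captured in the transcript never reaches the LLM.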

While nowhere near as good as the audio in Monday's demo, tts-1 is still a really impressive model. I've been using it via my ospeak CLI tool since it was released back in November.

As for images? Those are generated using DALL-E 3, through a process where ChatGPT directly prompts that model. I wrote about how that works back in October.

So what's going on with ChatGPT's GPT-4o mode is completely obvious, provided you already understand:

tts-1

I'm reminded of the kerfuffle back in March when the Google Gemini image creator was found to generate images of Black Nazis. I saw a whole bunch of people refer to that in conversations about the Google Gemini Pro 1.5 LLM, released at the same time, despite the quality of that model being entirely unrelated to Google's policy decisions about how one of the interfaces to that model should make use of the image creator tool.

If you're fully immersed in this world, it's easy to lose track of how incredibly complicated these systems have become. The amount you have to know in order to even understand what that "4o" mode in the ChatGPT app does is very easy to underestimate.

Fundamentally these are challenges in user experience design. You can't just write documentation about them, because no-one reads documentation.

A good starting point here is to acknowledge the problem. LLM systems are extremely difficult to understand and use. We need to design the tools we build on top of them accordingly.

On May 16th around 1PM PT OpenAI released a new iPhone app update which adds the following warning message the first time you try to access that headphones icon:

New Voice Mode coming soon

We plan to launch a new Voice Mode with new GPT-4o capabilities in an alpha within ChatGPT Plus in the coming weeks. We'll let you know when you have access.

1970-01-01 08:00:00

If we want LLMs to be less hype and more of a building block for creating useful everyday tools for people, AI companies' shift away from scaling and AGI dreams to acting like regular product companies that focus on cost and customer value proposition is a welcome development.

1970-01-01 08:00:00

Glyph’s tips on making the most out of PyCon. I particularly like his suggestion that “dinners are for old friends, but lunches are for new ones”.

I’m heading out to Pittsburgh tonight, and giving a keynote (!) on Saturday. If you see me there please come and say hi!

Via Lobste.rs

1970-01-01 08:00:00

But unlike the phone system, we can’t separate an LLM’s data from its commands. One of the enormously powerful features of an LLM is that the data affects the code. We want the system to modify its operation when it gets new training data. We want it to change the way it works based on the commands we give it. The fact that LLMs self-modify based on their input data is a feature, not a bug. And it’s the very thing that enables prompt injection.

1970-01-01 08:00:00

The MacBook Airs are Apple’s best-selling laptops; the iPad Pros are Apple’s least-selling iPads. I think it’s as simple as this: the current MacBook Airs have the M3, not the M4, because there isn’t yet sufficient supply of M4 chips to satisfy demand for MacBook Airs.

1970-01-01 08:00:00

Context caching for Google Gemini

Another new Gemini feature announced today. Long context models enable answering questions against large chunks of text, but the price of those long prompts can be prohibitive: $3.50/million for Gemini Pro 1.5 up to 128,000 tokens and $7/million beyond that.

Context caching offers a price optimization, where the long prefix prompt can be reused between requests, halving the cost per prompt but at an additional cost of $4.50 / 1 million tokens per hour to keep that context cache warm.

Given that hourly extra charge this isn’t a default optimization for all cases, but certain high traffic applications might be able to save quite a bit on their longer prompt systems.
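A rough break-even estimate follows from the quoted prices. This sketch makes several simplifying assumptions: it counts only the shared prefix, treats "halving" as applying to those cached prefix tokens, uses the under-128,000-token rate, and ignores output tokens and any cost of creating the cache. The function names are hypothetical.

```python
PROMPT_PRICE = 3.50              # USD per 1M prompt tokens (up to 128,000 tokens)
CACHED_PRICE = PROMPT_PRICE / 2  # assumed: cached prefix billed at half price
STORAGE_PRICE = 4.50             # USD per 1M cached tokens per hour


def hourly_cost_uncached(prefix_tokens: int, requests_per_hour: float) -> float:
    """Cost per hour of resending the full prefix with every request."""
    return requests_per_hour * prefix_tokens / 1_000_000 * PROMPT_PRICE


def hourly_cost_cached(prefix_tokens: int, requests_per_hour: float) -> float:
    """Cost per hour with the prefix cached: half-price reads plus storage."""
    per_request = prefix_tokens / 1_000_000 * CACHED_PRICE
    storage = prefix_tokens / 1_000_000 * STORAGE_PRICE
    return requests_per_hour * per_request + storage


# Requests per hour at which caching starts to pay off.
# The prefix size cancels out, so this does not depend on prompt length.
break_even = STORAGE_PRICE / (PROMPT_PRICE - CACHED_PRICE)

print(round(break_even, 2))
print(round(hourly_cost_uncached(100_000, 10), 2))
print(round(hourly_cost_cached(100_000, 10), 2))
```

Under these assumptions the cache pays for itself once the same prefix is reused roughly three or more times per hour.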

It will be interesting to see if other vendors such as OpenAI and Anthropic offer a similar optimization in the future.

Via @officiallogank

1970-01-01 08:00:00

A new release of my llm-gemini plugin adding support for the Gemini 1.5 Flash model that was revealed this morning at Google I/O.

I'm excited about this new model because of its low price. Flash is $0.35 per 1 million tokens for prompts up to 128K tokens and $0.70 per 1 million tokens for longer prompts - up to a million tokens now and potentially two million at some point in the future. That's 1/10th of the price of Gemini Pro 1.5, cheaper than GPT 3.5 ($0.50/million) and only a little more expensive than Claude 3 Haiku ($0.25/million).

1970-01-01 08:00:00

How developers are using Gemini 1.5 Pro’s 1 million token context window

I got to be a talking head for a few seconds in an intro video for today's Google I/O keynote, talking about how I used Gemini Pro 1.5 to index my bookshelf (and with a cameo from my squirrel nutcracker). I'm at 1m25s.

(Or at 10m6s in the full video of the keynote.)

1970-01-01 08:00:00

Why your voice assistant might be sexist

Given OpenAI's demo yesterday of a vocal chat assistant with a flirty, giggly female voice - and the new ability to be interrupted! - it's worth revisiting this piece by Chris Baraniuk from June 2022 about gender dynamics in voice assistants. Includes a link to this example of a synthesized non-binary voice.

1970-01-01 08:00:00

LLM 0.14, with support for GPT-4o

It's been a while since the last LLM release. This one adds support for OpenAI's new model:

llm -m gpt-4o "fascinate me"

Also a new llm logs -r (or --response) option for getting back just the response from your last prompt, without wrapping it in Markdown that includes the prompt.

Plus nine new plugins since 0.13!

1970-01-01 08:00:00

OpenAI announced a new model today: GPT-4o, where the o stands for "omni".

It looks like this is the gpt2-chatbot we've been seeing in the Chat Arena the past few weeks.

GPT-4o doesn't seem to be a huge leap ahead of GPT-4 in terms of "intelligence" - whatever that might mean - but it has a bunch of interesting new characteristics.

First, it's multi-modal across text, images and audio as well. The audio demos from this morning's launch were extremely impressive.

ChatGPT's previous voice mode worked by passing audio through a speech-to-text model, then an LLM, then a text-to-speech for the output. GPT-4o does everything with the one model, reducing latency to the point where it can act as a live interpreter between people speaking in two different languages. It also has the ability to interpret tone of voice, and has much more control over the voice and intonation it uses in response.

It's very science fiction, and has hints of uncanny valley. I can't wait to try it out - it should be rolling out to the various OpenAI apps "in the coming weeks".

Meanwhile the new model itself is already available for text and image inputs via the API and in the Playground interface, as model ID "gpt-4o" or "gpt-4o-2024-05-13". My first impressions are that it feels notably faster than gpt-4-turbo.

This announcement post also includes examples of image output from the new model. It looks like they may have taken big steps forward in two key areas of image generation: output of text (the "Poetic typography" examples) and maintaining consistent characters across multiple prompts (the "Character design - Geary the robot" example).

The size of the vocabulary of the tokenizer - effectively the number of unique integers used to represent text - has increased to ~200,000 from ~100,000 for GPT-4 and GPT-3.5. Inputs in Gujarati use 4.4x fewer tokens, Japanese uses 1.4x fewer, Spanish uses 1.1x fewer. Previously languages other than English paid a material penalty in terms of how much text could fit into a prompt; it's good to see that effect being reduced.

Also notable: the price. OpenAI claim a 50% price reduction compared to GPT-4 Turbo. Conveniently, gpt-4o costs exactly 10x gpt-3.5: 4o is $5/million input tokens and $15/million output tokens. 3.5 is $0.50/million input tokens and $1.50/million output tokens.
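The 10x relationship can be checked with a small cost calculation. This is a sketch using only the per-million prices quoted above. The request size is a hypothetical example, and the dictionary keys are labels for this illustration rather than official API model IDs.

```python
from decimal import Decimal

# USD per 1M tokens as (input, output), from the figures quoted above.
PRICES = {
    "gpt-4o": (Decimal("5"), Decimal("15")),
    "gpt-3.5": (Decimal("0.50"), Decimal("1.50")),
}
MILLION = Decimal(1_000_000)


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Cost in USD of one request, billed separately for input and output."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / MILLION


# Hypothetical request: 10,000 tokens in, 1,000 tokens out.
cost_4o = request_cost("gpt-4o", 10_000, 1_000)
cost_35 = request_cost("gpt-3.5", 10_000, 1_000)
print(cost_4o, cost_35, cost_4o / cost_35)
```

Because both the input and output prices differ by the same factor, the ratio stays at 10 whatever the mix of input and output tokens.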

(I was a little surprised not to see a price decrease there to better compete with the less expensive Claude 3 Haiku.)

The price drop is particularly notable because OpenAI are promising to make this model available to free ChatGPT users as well - the first time they've directly made their "best" model available to non-paying customers.

Tucked away right at the end of the post:

We plan to launch support for GPT-4o's new audio and video capabilities to a small group of trusted partners in the API in the coming weeks.

I'm looking forward to learning more about these video capabilities, which were hinted at by some of the live demos in this morning's presentation.

1970-01-01 08:00:00

I’m no developer, but I got the AI part working in about an hour.

What took longer was the other stuff: identifying the problem, designing and building the UI, setting up the templating, routes and data architecture.

It reminded me that, in order to capitalise on the potential of AI technologies, we need to really invest in the other stuff too, especially data infrastructure.

It would be ironic, and a huge shame, if AI hype sucked all the investment out of those things.

— Tim Paul

1970-01-01 08:00:00

Fascinating, detailed low-level notes on how to get the most out of NVIDIA's H100 GPUs (currently selling for around $40,000 apiece) from the research team at Stanford who created FlashAttention, among other things.

The swizzled memory layouts are flat-out incorrectly documented, which took considerable time for us to figure out.

Via Hacker News

1970-01-01 08:00:00

It’s still very early days for Mojo, the new systems programming language from Chris Lattner that imitates large portions of Python and can execute Python code directly via a compatibility layer.

Ferdinand Schenck reports here on building a PNG decoding routine in Mojo, with a detailed dive into both the PNG spec and the current state of the Mojo language.

Via Hacker News

1970-01-01 08:00:00

About ARDC (Amateur Radio Digital Communications)

In ham radio adjacent news, here's a foundation that it's worth knowing about:

ARDC makes grants to projects and organizations that are experimenting with new ways to advance both amateur radio and digital communication science.

In 1981 they were issued the entire 44.x.x.x block of IP addresses - 16 million in total. In 2019 they sold a quarter of those IPs to Amazon for about $100 million, providing them with a very healthy endowment from which they can run their grants program!
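The numbers above can be checked with Python's standard `ipaddress` module. This is a back-of-the-envelope sketch: the per-address figure relies on the approximate "$100 million" quoted above and assumes exactly a quarter of the block was sold.

```python
import ipaddress

block = ipaddress.ip_network("44.0.0.0/8")
total = block.num_addresses              # 2**24 addresses in a /8
sold = total // 4                        # "a quarter of those IPs"
price_per_address = 100_000_000 / sold   # using the "about $100 million" figure

print(total, sold, round(price_per_address, 2))
```

That works out to a little under $24 per address.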